What Exactly Is Deep Learning AI?

Deep learning is a subset of machine learning based on artificial neural networks that learn their own representations of data. The approach is loosely inspired by the way the human brain processes information. Deep learning models can pick out intricate patterns in images, text, audio, and other kinds of data, and use those patterns to produce insights and predictions.

The term “deep” in deep learning refers to the use of multiple network layers. These layers are made up of artificial neurons, or nodes, and fall into three types: an input layer, one or more hidden layers, and an output layer. Data flows through the layers in order, with the output of one layer serving as the input to the next.

Deep learning algorithms learn from examples and have been applied in fields as diverse as computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection, and board game programmes. In some of these areas, deep learning systems have achieved results that rival or exceed human performance.

What’s the relation between Deep Learning, Machine Learning, and Artificial Intelligence?

Within the vast field of computer science, deep learning, machine learning, and artificial intelligence form a cohesive trio. The overarching concept is artificial intelligence (AI), which encompasses the development of intelligent machines capable of autonomous task execution. Machine learning (ML), a subset of AI, focuses on the development of algorithms that allow machines to learn from data and gradually improve their performance. Deep learning (DL) is a subset of machine learning that uses artificial neural networks to model intricate data patterns and relationships.

Simply put, deep learning is a subset of machine learning, and machine learning is a subset of artificial intelligence. The relationship can be pictured as three concentric circles: AI is the outermost circle, machine learning sits inside it, and deep learning sits inside machine learning.

Natural language processing, computer vision, and robotics are examples of AI technologies and methods. As a subfield of AI, machine learning automates the process of learning from data and making predictions or decisions based on that data. Deep learning, a subfield of machine learning, uses artificial neural networks to process and analyse massive amounts of data, enabling machines to recognise complex patterns and make more accurate predictions.

To summarise, deep learning is a specific methodology within the domain of machine learning, which itself is part of the larger landscape of artificial intelligence. These interconnected technologies work together to enable the creation of intelligent systems capable of autonomous operation, data-driven learning, and continuous performance improvement.

Deep Learning’s Importance in Today’s World

Deep learning has become very important in today’s world because it has reshaped many industries and applications. By using artificial neural networks to model complex patterns and relationships in data, it allows machines to make more accurate predictions than was previously practical.

Some of the key reasons deep learning is important in today’s world include:

Greater accuracy and efficiency: Deep learning models can process large amounts of data and, once trained, deliver insights in real time, which improves accuracy and efficiency in applications such as healthcare diagnostics, fraud detection, and natural language processing.

Automation: Deep learning allows for the automation of previously human-only tasks such as image recognition, speech translation, and customer relationship management. This automation can save businesses time and resources, allowing them to focus on other strategic areas.

Cost-effectiveness: Deep learning can help businesses save money by automating tasks, utilising unstructured data, and adapting to growing amounts of data. It can also detect flaws in products and code errors, lowering the costs of product-related accidents and recalls.

Innovation: Deep learning has enabled ground-breaking advances in a variety of fields, including self-driving cars, virtual assistants, and intelligent gaming. These innovations have the potential to transform industries and improve people’s quality of life.

Addressing complex problems: Deep learning can handle problems that traditional machine learning algorithms struggle with, such as natural language processing, computer vision, and speech recognition. This ability to handle complex tasks has made it an essential component of many AI applications.

Deep learning is critical in today’s world because of its ability to improve accuracy and efficiency, automate tasks, reduce costs, drive innovation, and address complex problems across a wide range of industries and applications.

Deep Learning Neural Network Fundamentals

Deep learning is built on neural networks: computational models loosely inspired by the structure and function of the human brain. A neural network is made up of interconnected layers of artificial neurons, or nodes. The layers fall into three types: the input layer, the hidden layers, and the output layer. Information passes through the layers in order, with each layer’s output providing the input for the next.

Deep Learning Architectures

Deep learning architectures have been developed to address a variety of tasks and problems. Among the most popular architectural styles are:

Classic Neural Networks

Classic neural networks, also known as feedforward neural networks, are the simplest type of neural network. They consist of an input layer, one or more hidden layers, and an output layer. Each layer’s nodes are fully connected to the next layer’s nodes, and information flows in one direction only, from input to output.
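
The one-directional flow described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names (`dense`, `forward`) and the hand-picked weights are hypothetical, and a real network would learn its weights during training rather than have them typed in.

```python
def relu(z):
    """Rectified Linear Unit: pass positive values through, clip negatives to 0."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every input feeds every node in the layer."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total))
    return outputs


def forward(inputs, layers):
    """Pass the data through the layers in order, from input to output."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden nodes -> 1 output.
hidden_layer = ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5], relu)
output_layer = ([[1.0, 2.0]], [0.1], lambda z: z)  # identity activation

prediction = forward([2.0, 1.0], [hidden_layer, output_layer])
print(prediction)
```

Each tuple in the list is one layer, so adding a deeper network is just a matter of appending more `(weights, biases, activation)` entries.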

Convolutional Neural Networks (CNNs)

These networks are designed specifically for processing grid-like data such as images. CNNs are built from convolutional layers, pooling layers, and fully connected layers. Convolutional layers apply filters to the input data to detect local patterns, while pooling layers reduce the spatial dimensions of the data, making the network more computationally efficient.
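
To make the two layer types concrete, here is a minimal sketch in plain Python, assuming a single-channel image, stride 1, no padding, and a hand-written filter (in a real CNN the filter values are learned). The function names `conv2d` and `max_pool` are illustrative and do not come from any particular library. As in most deep learning libraries, the "convolution" here is technically a cross-correlation, because the filter is not flipped.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical filter that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)  # 4x4 map, strongest where the edge is
pooled = max_pool(feature_map)       # 2x2 summary of the feature map
print(feature_map)
print(pooled)
```

The feature map lights up only in the column where the edge sits, and pooling shrinks the 4x4 map to 2x2 while keeping that response.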

Recurrent Neural Networks (RNNs)

RNNs are designed to process data sequences such as time series or natural language. They have node connections that form directed cycles, allowing them to keep a hidden state that can capture information from previous time steps. RNNs can model temporal dependencies in data using this architecture.
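
The hidden state can be illustrated with a small sketch of a simple (Elman-style) recurrent step, assuming a `tanh` activation and hand-picked weights. The names `rnn_step` and `run_rnn` and all the numbers are hypothetical and chosen only for demonstration.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """Compute the new hidden state from the current input and the previous state."""
    h_new = []
    for k in range(len(h_prev)):
        total = b_h[k]
        total += sum(w * xi for w, xi in zip(w_xh[k], x))       # input contribution
        total += sum(w * hi for w, hi in zip(w_hh[k], h_prev))  # recurrent contribution
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, h0, w_xh, w_hh, b_h):
    """Feed the sequence one time step at a time, carrying the hidden state forward."""
    h = h0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.5]]
w_hh = [[0.1, 0.0], [0.0, 0.1]]
b_h = [0.0, 0.0]

# A single pulse followed by silence.
sequence = [[1.0], [0.0], [0.0]]
states = run_rnn(sequence, [0.0, 0.0], w_xh, w_hh, b_h)
print(states)
```

Even though the input is zero after the first step, the later hidden states are non-zero: the network still carries a fading trace of the earlier input.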

Long Short-Term Memory Networks (LSTMs)

LSTMs are a type of RNN that can deal with long-term dependencies in sequential data, making them ideal for tasks like language translation, speech recognition, and time series forecasting. LSTMs control the flow of information using a memory cell and gates, allowing them to selectively retain or discard information as needed.
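
The gating idea can be sketched with a single-unit LSTM cell that takes one scalar input. This is a simplified illustration, not a library implementation: the function name `lstm_step`, the parameter layout, and the hand-picked values are all assumptions made for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    params maps each gate name to (input weight, recurrent weight, bias).
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    forget = sigmoid(pre_activation("forget"))        # how much old memory to keep
    write = sigmoid(pre_activation("input"))          # how much new content to store
    expose = sigmoid(pre_activation("output"))        # how much memory to reveal
    candidate = math.tanh(pre_activation("candidate"))  # proposed new content

    c = forget * c_prev + write * candidate  # updated memory cell
    h = expose * math.tanh(c)                # updated hidden state
    return h, c


# Hypothetical parameters: keep memory by default, write only when x is large.
params = {
    "forget": (0.0, 0.0, 4.0),
    "input": (8.0, 0.0, -4.0),
    "output": (0.0, 0.0, 4.0),
    "candidate": (2.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
cell_states = []
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    cell_states.append(c)
print(cell_states)
```

With these values the cell stores the first input and then holds on to most of it over the following steps, because the forget gate stays close to 1 and the input gate stays close to 0 when `x` is zero.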

These architectures can be combined and tailored to produce more complex and specialised deep learning models for a wide range of tasks and applications.

Neural Network Structure and Components

As outlined above, a neural network is made up of interconnected layers of artificial neurons, known as nodes, organised into an input layer, one or more hidden layers, and an output layer. The main components are described below.

- Input Layer: The input layer receives the data fed into the neural network. Each node in the input layer represents a different feature of the dataset. In an image recognition task, for example, the input layer may include one node for each of the image’s pixel values.

- Hidden Layers: The intermediate layers between the input and output layers are known as hidden layers. They transform the data they receive, which helps the neural network learn complex patterns and relationships. The number of hidden layers, and the number of nodes within each one, varies with the complexity of the problem and the network’s architecture.

- Output Layer: The output layer generates the final result or prediction based on the inputs. The output layer of a classification task may contain nodes representing different classes, whereas the output layer of a regression task may contain a single node representing the predicted value.

- Artificial Neurons: Artificial neurons, also known as perceptrons or nodes, are the basic building blocks of a neural network. Each neuron takes one or more inputs, multiplies each by a weight, and sums the results, usually adding a bias term. The sum is then passed through a non-linear activation function, which produces the neuron’s output, or activation.

- Connections and Weights: A neural network’s connections represent the links between nodes in different layers. Each connection has an associated weight, which determines the connection’s strength. The neural network adjusts these weights during training to minimise the difference between its predictions and the actual target values.

- Activation Functions: Activation functions are non-linear functions that are applied to a neuron’s weighted sum of inputs. They introduce non-linearity into the neural network, allowing it to learn complex data patterns and relationships. Sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent) are examples of common activation functions.
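
The components in this list can be tied together in a short sketch: a single artificial neuron, two common activation functions, and a gradient-descent step that adjusts the weights to shrink the gap between prediction and target. It assumes a sigmoid activation, a squared-error loss, and a learning rate of 0.5, all chosen for illustration; the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """One gradient-descent update for a sigmoid neuron with squared-error loss."""
    y = neuron(inputs, weights, bias, sigmoid)
    # Gradient of 0.5 * (y - target)^2 with respect to the weighted sum z.
    delta = (y - target) * y * (1.0 - y)
    new_weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias


# Toy dataset: the logical OR of two binary inputs.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = [0.0, 0.0], 0.0
for _ in range(2000):
    for inputs, target in data:
        weights, bias = train_step(inputs, target, weights, bias)

predictions = [1 if neuron(x, weights, bias, sigmoid) > 0.5 else 0 for x, _ in data]
print(weights, bias, predictions)
```

A full network repeats the same idea across many neurons and layers, using backpropagation to work out each weight’s share of the error.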

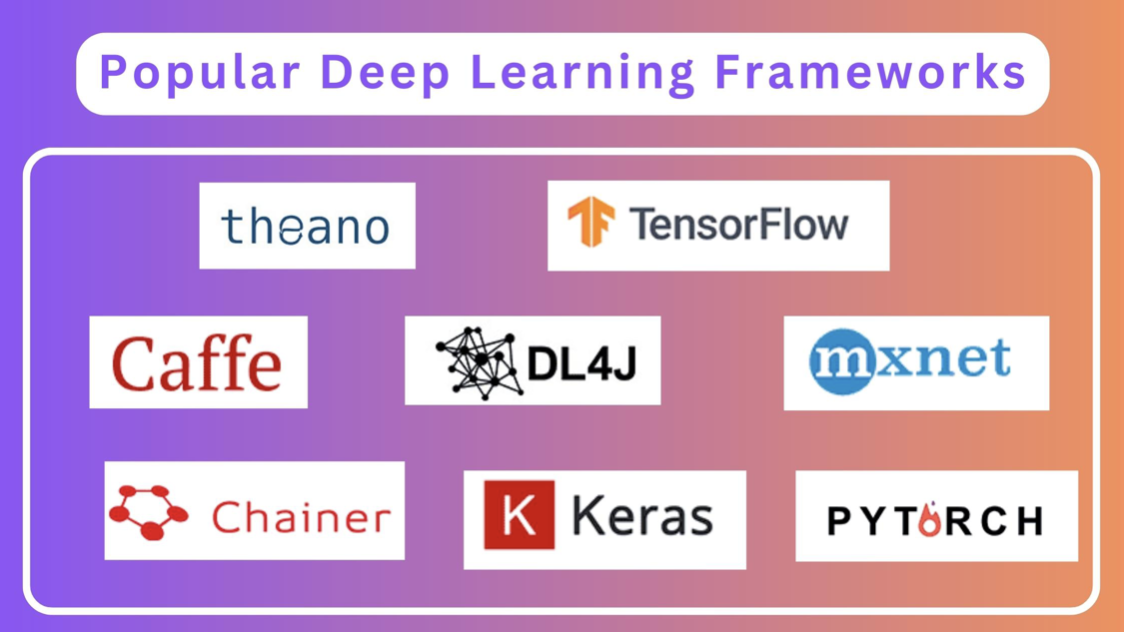

Popular Deep Learning Frameworks

TensorFlow: Developed by Google, TensorFlow is an open-source library for numerical computation and large-scale machine learning. Its primary API is in Python, with support for other languages such as C++, JavaScript, and Java, and an R interface is also available. It represents computations as dataflow graphs. TensorFlow is widely used for implementing machine learning and deep learning models and algorithms.

Keras: Keras is a high-level deep learning API for implementing neural networks, originally created by Google engineer François Chollet. It is written in Python and runs on top of a back-end computation engine; supported back ends have included TensorFlow and Theano, among others. Keras is designed to be user-friendly, modular, and extensible, making it simple to build and test deep learning models.

PyTorch: PyTorch is an open-source machine learning library developed by Facebook’s AI Research team (now part of Meta) and based on the earlier Torch library. It is intended to increase the flexibility and speed of deep neural network implementation. PyTorch is widely used by AI researchers and practitioners due to its ease of use and its ability to switch between CPU and GPU computation.

Caffe: Convolutional Architecture for Fast Feature Embedding (Caffe) is a deep learning framework that supports various deep learning architectures, such as CNN, RCNN, LSTM, and fully connected networks. Caffe is popular for image classification and segmentation tasks due to its GPU support and out-of-the-box templates that simplify model setup and training.

Theano: Theano is an open-source numerical computation library for Python that allows developers to efficiently define, optimise, and evaluate multi-dimensional array-based mathematical expressions. While not a deep learning framework in and of itself, Theano is frequently used as a backend for other deep learning libraries such as Keras.

Deeplearning4j (DL4J): Deeplearning4j is an open-source, distributed deep learning library for the Java Virtual Machine (JVM). It provides a high-level API for building and training neural networks and can import models created with other tools such as Keras and TensorFlow.

Chainer: Chainer is a flexible and user-friendly deep learning framework that allows users to define and manipulate neural network models in Python on the fly. Chainer is compatible with a wide range of network architectures, including feedforward neural networks, recurrent neural networks, and convolutional neural networks.

MXNet: MXNet is an open-source deep learning framework that allows developers to create, train, and deploy deep neural networks using a variety of programming languages such as Python, R, Scala, and others. MXNet is built for efficiency and flexibility, making it ideal for a wide range of applications.

These deep learning frameworks provide a variety of features and capabilities that make it easier for developers and researchers to build and test deep learning models across a variety of tasks and applications.

Deep Learning Applications

Deep learning has numerous applications in a variety of industries. Among the most popular applications are:

- Healthcare: Deep learning is used in medical research and drug discovery, and in medical imaging to help detect serious conditions such as cancer and diabetic retinopathy.

- Personalized Marketing: Deep learning algorithms can analyse customer data to create personalised marketing campaigns that increase customer engagement and sales.

- Financial Fraud Detection: Deep learning models can analyse large amounts of financial data to detect fraudulent activity and protect businesses from financial losses.

- Natural Language Processing (NLP): Deep learning is used in NLP tasks such as sentiment analysis, machine translation, and text summarisation to enable machines to understand and process human language.

- Autonomous Vehicles: Deep learning algorithms are used in the development of self-driving cars, allowing them to recognise objects, navigate roads, and make real-time decisions.

- Fake News Detection: Deep learning models can analyse text and identify patterns that help detect and filter out fake news articles, supporting the credibility of information.

- Facial Recognition: In facial recognition systems, deep learning is used for security, authentication, and identification.

- Recommendation Systems: Deep learning algorithms can analyse user preferences and behaviour to provide personalised product, service, and content recommendations.

- Speech Recognition: Deep learning models can process and analyse audio data in order to convert spoken language into text, allowing voice assistants and other applications to recognise and respond to human speech.

- Computer Vision: Deep learning is used in computer vision tasks like image classification, object detection, and facial recognition to help machines interpret and understand visual data.

These applications demonstrate deep learning’s versatility and potential for solving complex problems and improving various aspects of our lives.

Conclusion

Deep learning AI has transformed the field of artificial intelligence, allowing machines to learn and adapt in previously unthinkable ways. As we continue to explore the potential of deep learning algorithms, we are seeing ground-breaking advances in areas such as natural language processing, computer vision, and autonomous systems. These advances are not only transforming industries but also improving our daily lives by providing us with smarter and more efficient tools.

However, as we embrace the power of deep learning AI, we must address the challenges and ethical concerns that it brings. Data privacy, bias reduction, and transparency in AI models are critical steps towards responsible AI development. We can harness the potential of deep learning AI to create a more connected, efficient, and intelligent world by encouraging collaboration among researchers, developers, and policymakers.

The possibilities for deep learning AI are vast and exciting as we look to the future. We can expect even more remarkable breakthroughs that will reshape the way we live, work, and interact with technology if research and innovation continue. It is our responsibility as a society to embrace these advancements while remaining focused on ethical and responsible AI development, ensuring that the benefits of deep learning AI are accessible and beneficial to all.